Where’s “surprise power over Ethernet” when you need it? Need to blow up the laptop of any cheeky fucker that unplugs one of my remote wifi repeaters to connect their own stuff up…

As soon as the ball at the end rotates, you’ll get fresh ink again - the amount that dries at the very tip is minuscule. This change dries up the slight detritus that builds up around the tip, too - you used to wipe that off onto your other hand if it was the first bit of writing you were doing that day. But damn, that was a few years ago.

Fortunately, wages have increased to match, right?

Ah yes. I remember preparing a recipe once that included frying up the ingredients in a cup of oil, and that turns out seriously fucking greasy if you use the UK cup size.

Bear in mind that the gallon we use is different from the US gallon, too:

- a UK gallon is eight (imperial) pints of 20 fluid ounces, so 4.54 litres

- a US gallon is 231 cubic inches, so 3.79 litres

The reason that I thought American car fuel economy was so terrible as a child is partly because UK mpg is +20% on US mpg for the same car on the same fuel. But also, because American car fuel economy is so terrible.
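For anyone who wants to check the +20% figure, here is a minimal sketch of the arithmetic. The two gallon definitions are the ones above; the 30 mpg car is a made-up example, and the function name is just for illustration.

```python
# Both gallons expressed in litres, from their definitions.
LITRES_PER_IMPERIAL_GALLON = 4.54609            # eight imperial pints
LITRES_PER_CUBIC_INCH = 0.016387064
LITRES_PER_US_GALLON = 231 * LITRES_PER_CUBIC_INCH  # about 3.785 litres


def us_mpg_to_uk_mpg(us_mpg: float) -> float:
    """Same car, same fuel: the bigger UK gallon takes you further."""
    return us_mpg * LITRES_PER_IMPERIAL_GALLON / LITRES_PER_US_GALLON


ratio = LITRES_PER_IMPERIAL_GALLON / LITRES_PER_US_GALLON
print(f"UK gallon / US gallon = {ratio:.3f}")                       # 1.201
print(f"30 US mpg = {us_mpg_to_uk_mpg(30.0):.1f} UK mpg")           # 36.0 (hypothetical car)
```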

21·3 months ago

That’s not correct, I’m afraid.

Thermal expansion is proportional to temperature; it’s quite significant for ye olde spinning rust hard drives but the mechanical stress affects all parts in a system. Especially for a gaming machine that’s not run 24/7 - it will experience thermal cycling. Mechanical strength also decreases with increasing temperature, making it worse.

Second law of thermodynamics is that heat only moves spontaneously from hotter to colder. A 60° bath can melt more ice than a 90° cup of coffee - it contains more heat - but it can’t raise the temperature of anything above 60°, which the coffee could. A 350W graphics card at 20° couldn’t raise your room above that temperature, but a 350W graphics card at 90° could do so. (The “runs colder” card would presumably have big fans to move the heat away.)
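To put rough numbers on the bath-versus-coffee comparison, here is a back-of-envelope sketch. The volumes (a 150 litre bath, a 250 ml coffee) are my assumptions, temperatures are taken as Celsius, and both are assumed to cool all the way to 0° while melting ice that is already at 0°.

```python
SPECIFIC_HEAT_WATER = 4.186   # kJ per kg per degree C
LATENT_HEAT_FUSION = 334.0    # kJ needed to melt 1 kg of ice at 0 C


def ice_melted_kg(water_kg: float, temp_c: float) -> float:
    """Ice melted by cooling this much water from temp_c down to 0 C."""
    heat_kj = water_kg * SPECIFIC_HEAT_WATER * temp_c
    return heat_kj / LATENT_HEAT_FUSION


bath = ice_melted_kg(150.0, 60.0)    # assumed 150 litre bath at 60 C
coffee = ice_melted_kg(0.25, 90.0)   # assumed 250 ml coffee at 90 C

print(f"bath melts about {bath:.1f} kg of ice")      # about 112.8 kg
print(f"coffee melts about {coffee:.2f} kg of ice")  # about 0.28 kg
```

The bath wins on total heat by a factor of several hundred, but nothing in that sum lets it push anything above 60°, which is the second-law point.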

5·3 months ago

Tell me about it. The numbers that I’m interested in - “decibels under full load”, “temperature at full load” - might as well not exist. Will I be able to hear myself think when I’m using this component for work? Will this GPU cook all of my hard drives, or can it vent the heat out the back sufficiently?

Just sing it! Couple of extra syllables in fee-ee-ling, you’re right back on the beat.

IVEBEENUSINGTHISKEYBORDFORWHOLEMONTHNDMMOREEFFICIENTTHNIVEEVERBEENBEFORE

No ‘a’, so it’s perfect for ordering some piss.

It’s a language essential! Dick, willy, cock, penis, shaft, manhood, todger, pole, …

I feel that ‘gender’ is probably a misleading term for the languages that have ‘grammatical gender’, it rarely has anything to do with genitalia. ‘Noun class’, where adjectives have to decline to agree with the class would fit better in most cases.

English essentially does not decline adjectives, except for historical outliers like blond/e where no-one much cares if you don’t bother, and it uses his / hers / its / etc. following a very predictable rule. So no ‘grammatical gender’.

For the love of God, Montresor!

6·5 months ago

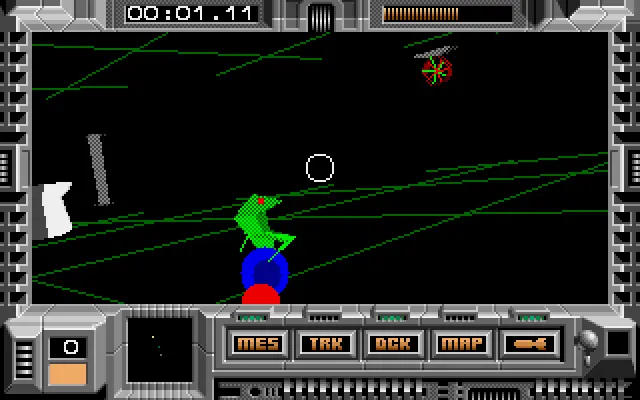

Oh yeah. Partying like it’s 1989 and I’ve booted up my Amiga. Let’s get some unicycling friends in here and do some hacking in 3D.

It’s been a perpetual source of surprise to me that curry houses are so ‘non-specific’. Pakistan and India together make about 1.7 billion people, about a fifth of the planet’s population, and I’d have thought an easy way to distinguish a restaurant would be to offer something more region-specific, but it’s fairly rare.

Here in the UK, the majority of curry houses are Bangladeshi - used to be the vast majority, now it’s more like 2/3rds. We’ve a couple of ‘more specific’ chains - both Bundobust and Dishoom do Mumbai-style, and they’re both fantastic - and there’s a few places that do well with the ‘naturally vegan’ cuisines, but mostly you can go into a restaurant and expect the usual suspects will be on the menu.

Same goes for Chinese restaurants - I don’t believe that a billion people all eat the same food, it’s too big a place for the same ingredients to be in season all the time. Why are they not more specific, more often?

PS3 most certainly had a separate GPU - it was based on the GeForce 7800 GTX. Console GPUs tend to be a little faster than their desktop equivalents, as they share the same memory. Rather than the CPU having to send e.g. model updates across a bus to update what the GPU is going to draw in the next frame, it can change the values directly in the GPU memory. And of course, the CPU can read the GPU framebuffer and make tweaks to it - that’s incredibly slow on desktop PCs, but console games can do things like tone mapping whenever they like, and it’s been a big problem for the RPCS3 developers to make that kind of thing run quickly.

The cell cores are a bit more like the ‘tensor’ cores that you’d get on an AI accelerator than a full-blown CPU core. They can’t address the RAM directly: each one works out of its own small local store, they can exchange data between themselves, and anything else has to be moved in and out by DMA transfers. The main CPU generally also has to set up and schedule any jobs that run on them. They’re also a lot more limited in what they can do than a main CPU core, but they are very very fast at what they can do.

If you are doing the kind of calculations where you’ve a small amount of data that needs a lot of repetitive maths done on it, they’re ideal. Bitcoin mining or crypto breaking for instance - set them up, let them go, check in on them occasionally. The main CPU acts as an orchestrator, keeping all the cell cores filled up with work to do and processing the end results. But if that’s not what you’re trying to do, then they’re borderline useless, and that’s a problem for the PS3, because most of its processing power is tied up in those cores.
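As a rough illustration of that orchestrator pattern (not actual Cell code), here is a minimal Python sketch. Ordinary threads stand in for the worker cores, and the job is a toy hash search; `search_chunk`, `orchestrate`, the chunk size and the `"000"` target are all made up for the example. It shows the structure only, not the performance.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def search_chunk(prefix: bytes, start: int, stop: int, target: str):
    """Worker job: tiny input, lots of repetitive maths, nothing else needed."""
    for nonce in range(start, stop):
        digest = hashlib.sha256(prefix + str(nonce).encode()).hexdigest()
        if digest.startswith(target):
            return nonce
    return None


def orchestrate(prefix: bytes, target: str, workers: int = 6,
                chunk: int = 5_000, limit: int = 200_000):
    """Orchestrator: queue up chunks, let the workers run, process the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        jobs = [
            pool.submit(search_chunk, prefix, start, min(start + chunk, limit), target)
            for start in range(0, limit, chunk)
        ]
        hits = [job.result() for job in jobs]
    found = [n for n in hits if n is not None]
    return min(found) if found else None


nonce = orchestrate(b"hello", "000")
print("lowest matching nonce:", nonce)
```

Each chunk is self-contained, so the orchestrator only has to hand work out and gather the answers, which is the kind of workload those cores suit.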

Some games have a somewhat predictable workload where offloading makes sense. Got some particle effects - some smoke where you need to do some complicated fluid-and-gravity simulations before copying the end result to the GPU? Maybe your main villain has a very dramatic cape that they like to twirl, and you need to run the simulation on that separately from everything else that you’re doing? Problem is, working out what you can and can’t offload is a massive pain in the ass; it requires a lot of developer time to optimise, when really you’d want the design team implementing that kind of thing; and slightly newer GPUs are a lot more programmable and can do the simpler versions of that kind of calculation both faster and much more in parallel.

The Cell processor turned out to be an evolutionary dead end. The resources needed to work on it (expensive developer time) just didn’t really make sense for a gaming machine. The things that it was better at are things that it just wasn’t quite good enough at - modern GPUs are Bitcoin monsters, far exceeding what the Cell can do, and if you’re really serious about crypto breaking then you probably have your own ASICs. Lots of identical, fast CPU cores are what developers want to work on - it’s much easier to reason about.

Have given up on reading Baalbuddy now that Nitter is dead :-( Only thing I want to check on that whole damn platform, too…

Varies even within a language. El ordenador in Iberian Spanish, la computadora in Latin America.

Joining up your pentagram clockwise? And that one’s not really large enough to stand in the centre of, now you’ve inscribed your blasphemous sacrament in Enochian anyway. Summoning circle for small children, maybe, not someone in their 30s. Amateurs.